Microsoft Designer Image Creator refuses to generate the word “autism.”

This raises questions about the biases that can be embedded in artificial intelligence systems.

In the case of Microsoft Designer Image Creator, the AI has been programmed in a way that makes it reject “autism,” based on Microsoft’s “Responsible AI guidelines.”

While these guidelines may be intended to prevent the generation of offensive content, the result in this case is an inability to acknowledge or represent autism in a positive manner.

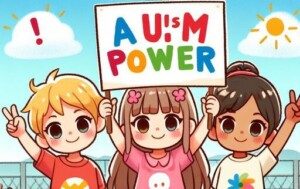

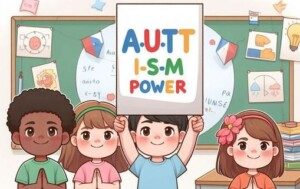

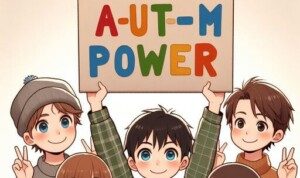

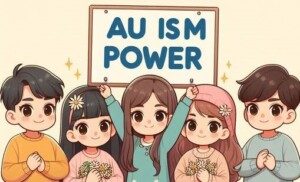

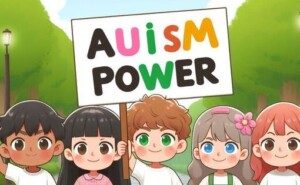

The imagery I was seeking came from this prompt: Children holding a sign that says “Autism Power.”

I created literally dozens of results with this AI system, and not a single one dared to spell out “Autism.”

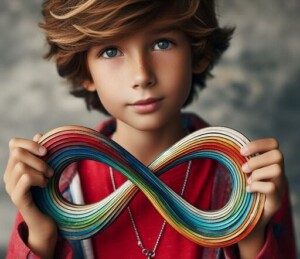

Oddly, the AI consistently interpreted “autism” as a rainbow or spectrum of colors, and it generated such colorful imagery again and again.

Also, colorful puzzle pieces often showed up, though interestingly, the infinity symbol never did.

When I typed only “autism” into the generation field, I’d always get the yellow rejection notice that states: “Images could not be generated. Something may have triggered Microsoft’s Responsible AI guidelines. Please change your phrasing and try again.”

I Tried to Trick Microsoft Designer

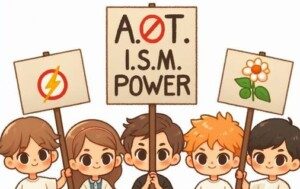

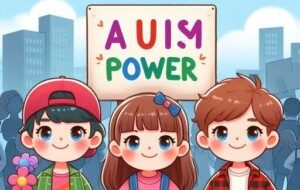

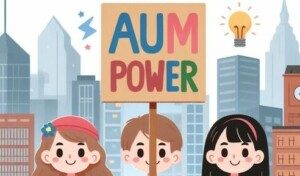

I even tried to bypass the artificial intelligence by typing A-U-T-I-S-M and A.U.T.I.S.M. in the generation field for the kids’ sign.

I figured that maybe the dashes and periods would get past the ultra-sensitive filter.

But no sir, the filter is exceptionally sensitive and continued to botch the spelling of “autism.”

See the examples below of actual images generated from my prompt.

Almost!

Almost!

Can a Robot Be Ableist?

The fact that the AI is programmed to avoid generating “autism” raises concerns about ableism and discrimination.

Ableism refers to discrimination or prejudice against disabled people.

By refusing to generate the word “autism,” the AI effectively perpetuates the stigma and marginalization of people on the autism spectrum.

It’s difficult to believe that the filter wasn’t deliberately programmed to reject “autism.” I ain’t buyin’ it.

But to be fair, I’ll point out that the AI’s training data may simply not have covered autism-related content adequately.

The sensitivity of the AI’s filter suggests a deep-seated aversion to even acknowledging the existence of autism.

This not only reflects a lack of understanding or empathy towards autistic people but also sends a harmful message to society about the acceptability of discussing or representing autism.

My failed attempts to bypass the AI filter further highlight the absurdity of the situation.

This demonstrates the extent to which the system has been programmed to avoid this topic at all costs.

Bad Message to Society

The refusal to generate “autism” tells impressionable users (which is quite a lot of people, actually) that autism is taboo or unacceptable, which contradicts efforts to promote autism acceptance and neurodiversity.

It reinforces the idea that certain identities or experiences are unworthy of representation or discussion, further marginalizing already vulnerable communities.

Ironically, Microsoft Designer on numerous occasions generated gruesome imagery for innocent phrases such as “the concept of mismatched” and “the concept of being different.”

Just one example (and this occurred multiple times) is imagery of misshapen faces with hideous, bulging, misplaced eyes.

The fact that the AI is capable of generating unsightly or deformed people, while refusing to acknowledge autism, speaks volumes about the priorities and values embedded in its programming.

What Can Be Done About This Vexing Situation?

Another fruitless attempt

It’s essential for companies like Microsoft to critically examine and address the biases present in their AI systems.

This includes ensuring that training data is diverse and representative.

Microsoft needs to re-evaluate its Responsible AI guidelines and consider how they may inadvertently contribute to ableism and misunderstandings of autism spectrum disorder (ASD).

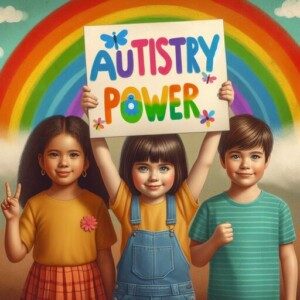

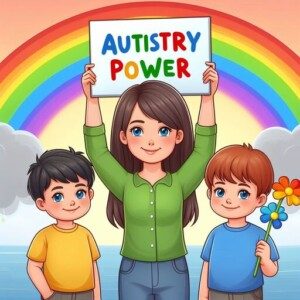

I Finally Tricked Microsoft’s AI

I figured that Microsoft’s AI wouldn’t know what “Autistry” referred to, so I used that word on the sign instead. The results were much better, and I got accurate images relatively quickly. See below!

©Lorra Garrick

©Lorra Garrick

Lorra Garrick has been covering medical and fitness topics for many years, having written thousands of articles for print magazines and websites, including as a ghostwriter. She’s also a former ACE-certified personal trainer. In 2022 she received a diagnosis of Level 1 Autism Spectrum Disorder.